Present Monolithic Architecture

Deadlines: 3/11, 5/11, 9/11, 15/11, 18/11, and final hand-in: 23rd November 2021.

Use application modernization to refactor the monolithic architecture of SkyCave into a system based on the microservice architectural style. This involves developing one selected REST-based microservice for a part of the ecosystem, writing service tests for it, collaborating DevOps style with other teams by developing consumer-driven tests (CDTs) for their services, and updating the swarm and pipeline for the SkyCave system.

Ideally, we should have collaboratively designed a microservice (MS) architecture for SkyCave. However, to lower the workload, I have decided to dictate the seams/bounded contexts of the monolith, and instead put more emphasis on executing the split in this exercise.

Therefore, I postulate that the SkyCave daemon should be split into the following set of services: a CaveService, a PlayerService, and a MessageService, all consumed by the (strangled) daemon.

So, as an example, instead of the PlayerServant.digRoom() method calling the CaveStorage like this:

```java
// ...
UpdateResult result =
    UpdateResult.translateFromHTTPStatusCode(
        storage.addRoom(p.getPositionString(), room));
```

it will instead call the CaveService, something like this:

```java
// ...
Result postResult = caveService.doPOSTonPath(...);
UpdateResult result =
    UpdateResult.translateFromHTTPStatusCode(postResult.statusCode);
```

Also, I have decided to require the APIs of the three new services to be REST-based. Teams must quickly (as outlined by the deadlines below) exchange and negotiate full API proposals so that teams can start developing CDTs.

I use the (rudimentary) API specification technique outlined in my FRDS book §7.7, and advise groups to do the same. However, if all groups agree, you may use the OpenAPI Specification (from the OpenAPI Initiative) or similar REST documentation techniques; it is much more comprehensive, but I guess also more work.

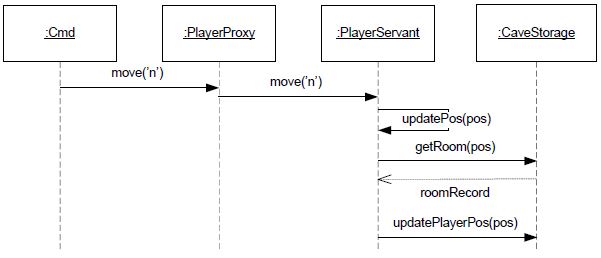

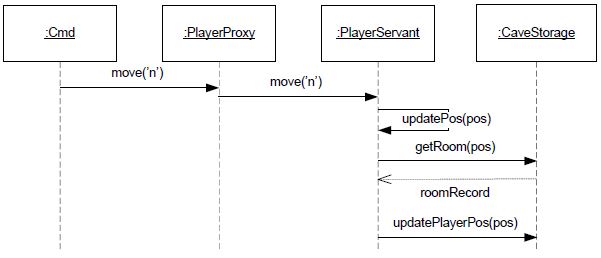

To give an impression of the strangling process, consider the PlayerServant.move() method. At the moment, it is implemented by modifying state in the underlying CaveStorage, as outlined by this sequence diagram:

Present Monolithic Architecture

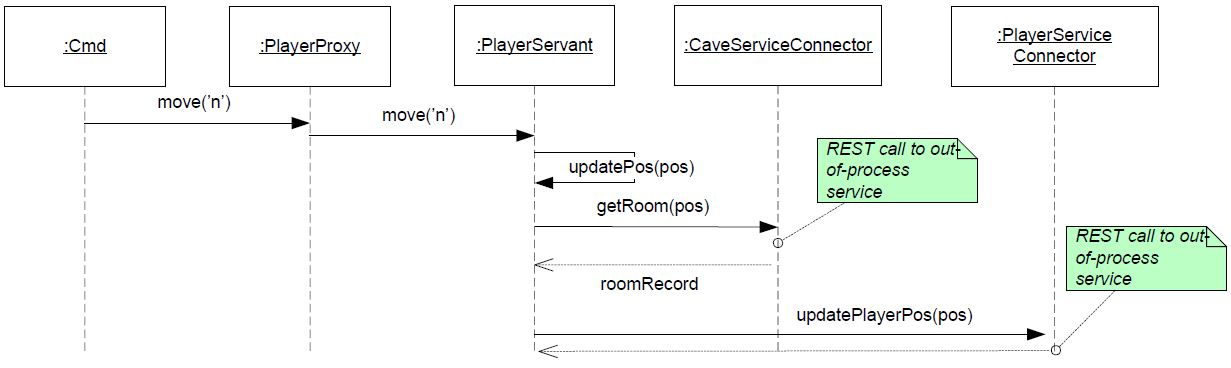

During this exercise, move() will be required to contact the CaveService and the PlayerService to get and set the proper state.
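To make the target concrete, here is a minimal sketch of how a strangled move() might coordinate the two services instead of touching CaveStorage. Everything in it is hypothetical: the CaveService and PlayerService method names, the "(x,y,z)" position string format, and the MoveSketch class are illustrations, not SkyCave's actual API, and the in-memory fakes merely stand in for the REST connectors (happy path only).

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical connector interface for the CaveService (room state).
interface CaveService {
  boolean roomExists(String position);
}

// Hypothetical connector interface for the PlayerService (player state).
interface PlayerService {
  String getPosition(String playerId);
  void setPosition(String playerId, String position);
}

// In-memory fakes standing in for the REST connectors.
class FakeCaveService implements CaveService {
  final Set<String> rooms = new HashSet<>();
  @Override public boolean roomExists(String position) { return rooms.contains(position); }
}

class FakePlayerService implements PlayerService {
  private final Map<String, String> positions = new HashMap<>();
  @Override public String getPosition(String playerId) { return positions.get(playerId); }
  @Override public void setPosition(String playerId, String position) { positions.put(playerId, position); }
}

// Sketch of the strangled move(): state lives in the two services only.
class MoveSketch {
  private final CaveService caveService;
  private final PlayerService playerService;

  MoveSketch(CaveService caveService, PlayerService playerService) {
    this.caveService = caveService;
    this.playerService = playerService;
  }

  /** Moves the player by (dx,dy,dz); returns false if there is no room there. */
  boolean move(String playerId, int dx, int dy, int dz) {
    // 1. GET the current position from the PlayerService, e.g. "(0,0,0)".
    String[] xyz = playerService.getPosition(playerId)
        .replace("(", "").replace(")", "").split(",");
    String target = "(" + (Integer.parseInt(xyz[0]) + dx) + ","
        + (Integer.parseInt(xyz[1]) + dy) + ","
        + (Integer.parseInt(xyz[2]) + dz) + ")";
    // 2. Ask the CaveService whether a room exists at the target.
    if (!caveService.roomExists(target)) {
      return false;
    }
    // 3. Update the player's position in the PlayerService.
    playerService.setPosition(playerId, target);
    return true;
  }
}
```

Note that the servant now makes two remote calls where it used to make local storage calls; this is why failure handling becomes a topic of its own later.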

Required Microservice Architecture

Each group is responsible for the development and initial CDTs of one of the three microservices, and for modernizing the daemon by consuming the two other microservices, supplied by two other teams/groups.

The assignment is as follows:

| Strangler group | Group | Produces | Consumes from groups |
| --- | --- | --- | --- |
| One | Alfa | CaveService | Bravo, Charlie |
| One | Bravo | MessageService | Alfa, Charlie |
| One | Charlie | PlayerService | Alfa, Bravo |
| Two | Delta | MessageService | Henrik, Golf |
| Two | Golf | PlayerService | Delta, Henrik |
| Two | Henrik | CaveService | Delta, Golf |
| Three | Echo | PlayerService | Lima, Henrik |
| Three | Lima | MessageService | Echo, Henrik |
| Three | Henrik | CaveService | Echo, Lima |

Your team will need to solve several exercises:

Design the REST API for your assigned microservice using FRDS notation, OpenAPI, or a similar format that the collaborating teams can accept (all groups must agree on a non-FRDS format; otherwise, use the FRDS format). Make sure to include paths, Location headers, status codes, JSON structure, etc. Be sure to adhere to proper REST Level 1, and do not just do URI tunneling (e.g. use POST for creating stuff, GET for getting stuff, etc.).
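As an illustration of letting the HTTP verb carry the intent and the status code report the outcome, here is a minimal in-memory sketch of a message resource. The path '/v1/message', the Response holder, and the handle method are hypothetical; in a real service this logic would sit behind whatever web framework you choose, and the API specification you negotiate is what counts.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical HTTP response holder: status code, Location header (or null), body (or null).
final class Response {
  final int status;
  final String location;
  final String body;

  Response(int status, String location, String body) {
    this.status = status;
    this.location = location;
    this.body = body;
  }
}

// Sketch of a REST resource: verbs on resources, not operations tunneled through URIs.
class MessageResource {
  private static final String COLLECTION = "/v1/message"; // hypothetical path
  private final Map<Integer, String> messages = new HashMap<>();
  private int nextId = 1;

  Response handle(String verb, String path, String body) {
    if (verb.equals("POST") && path.equals(COLLECTION)) {
      // Create: the server assigns the id; answer 201 Created plus a Location header.
      int id = nextId++;
      messages.put(id, body);
      return new Response(201, COLLECTION + "/" + id, body);
    }
    if (verb.equals("GET") && path.startsWith(COLLECTION + "/")) {
      // Read: 200 OK with the representation, or 404 Not Found.
      String idPart = path.substring(COLLECTION.length() + 1);
      try {
        String message = messages.get(Integer.parseInt(idPart));
        return message == null ? new Response(404, null, null) : new Response(200, null, message);
      } catch (NumberFormatException e) {
        return new Response(404, null, null);
      }
    }
    // Known resource but unsupported verb: 405; anything else (e.g. '/v1/createMessage'): 404.
    boolean known = path.equals(COLLECTION) || path.startsWith(COLLECTION + "/");
    return new Response(known ? 405 : 404, null, null);
  }
}
```

A consumer's CDT would then assert exactly these things: the 201 status, the Location header, and that a GET on that location returns the created message.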

Develop CDTs using Java and TestContainers (hard requirement), and develop your REST service (using any tech stack you want; C# or Python is fine) as well as a Docker Hub image of it. The service's storage tier must be properly encapsulated behind an interface and be configurable to support either fake object storage or real NoSQL storage.
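A minimal sketch of the storage encapsulation requirement, written in Java for illustration and assuming a PlayerService that stores player positions. The names PlayerStorage, FakePlayerStorage, and PlayerRestService and their methods are hypothetical; a real NoSQL connector (against MongoDB, Redis, or similar) would simply be a second implementation of the same interface.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical storage abstraction: the REST layer only ever talks to this
// interface, never to a database driver directly.
interface PlayerStorage {
  void updatePosition(String playerId, String position);
  Optional<String> getPosition(String playerId);
}

// Fake object implementation: in-memory and deterministic. It is enough for
// the first deadline and for the consuming teams' strangling work.
class FakePlayerStorage implements PlayerStorage {
  private final Map<String, String> positions = new HashMap<>();

  @Override
  public void updatePosition(String playerId, String position) {
    positions.put(playerId, position);
  }

  @Override
  public Optional<String> getPosition(String playerId) {
    return Optional.ofNullable(positions.get(playerId));
  }
}

// The service gets its storage by constructor injection, so replacing the
// fake with a real NoSQL connector requires no change here.
class PlayerRestService {
  private final PlayerStorage storage;

  PlayerRestService(PlayerStorage storage) { this.storage = storage; }

  /** Returns the HTTP status a GET on the player's position would yield. */
  int statusOfGetPosition(String playerId) {
    return storage.getPosition(playerId).isPresent() ? 200 : 404;
  }
}
```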

Remember that code reuse across microservices is considered bad practice. Thus, copying stuff from your existing code base, as well as support classes from SkyCave, is the proper way to do it. (You do not want to develop almost the same stuff again, right?) As an example, my own CaveService contains verbatim copies of classes like Point3 and Direction, and my storage connector interface and implementations have heavily "stolen" already developed code from my original CaveStorage and my own RedisCaveStorage code.

Provide your consuming groups (and me) with the means to access your CDTs (like a Bitbucket repository link or a Java source file + Gradle library dependencies) and the image containing your service (typically a 'fatJar' image with no source code), and document how to configure the service for either fake object or real NoSQL storage. Deadline: 15/11. You only need to support the fake object storage at this deadline.

The repositories for your service and CDTs need not be private, but keep your SkyCave repository private, as in course one. See hints and advice below.

The next requirements can actually be ordered in any sequence or developed in parallel. However, be aware that there is quite a lot of effort involved!

Operation Checkpoint! Update your production compose file for the SkyCave daemon to the full deployment architecture, and deploy it on your production server/swarm. Note: You are free to 'forget' all old data.

Clarification: You may take two different approaches here.

The latter approach is arguably more DevOps. As far as I can judge, it will also keep the three SkyCave deployments completely synchronized with respect to content. A mix of the two approaches is also possible. Remember to document which approach you have taken in the report.

Optional and potentially highly work-intensive exercise: Use the Nygard 'Trickle then batch' pattern (or another suitable pattern) to migrate the live production system with no downtime.

Document the process and product by writing chapter 1 of the report template below.

Hand-in:

Evaluation:

Your report is initially evaluated as pass/not pass (with some ideas for improvement). The final report (which also includes the next two mandatory exercises) is evaluated together with the final oral defense to determine your final grade for this course.

Below are some guidelines and hints.

Remember the microservice testing paradigm and its various test classes: CDTs, service tests, connector tests, etc. This is to your advantage: develop your microservice with a well-designed interface to the storage layer, and make just a fake object implementation of it at the onset. This variant serves perfectly for your consumers to use during their SkyCave strangling. Next, develop the storage connector using Fowler integration tests (connector tests) against a suitable storage engine (MongoDB, Redis, or another NoSQL engine). Ensure your service is configurable using a dependency injection technique of your choosing (environment variables, command line parameters, CPF reading, ...), and inform your consuming groups how to configure it. Newman principle: create one artifact and manage configuration separately (Slideset W7-1).

Examples of configuration techniques and their documentation:

The image 'myrepo/caveservice' defaults to fake storage, but setting the environment variable 'REALSTORAGEHOST' to the name of a host running Redis on its default port, e.g. using '-e REALSTORAGEHOST=localhost' in the docker run command, will make the cave service use the Redis on localhost as its storage layer.

Or:

The image 'myrepo/caveservice' uses the CPF system and provides three CPFs (fake.cpf, localhost.cpf, and swarm.cpf), with 'fake.cpf' as the default. Just provide the wanted CPF in the usual Gradle way, e.g. 'docker run -d myrepo/caveservice gradle service -Pcpf=localhost.cpf'. 'fake.cpf' configures an in-memory fake object storage, 'localhost.cpf' connects to a MongoDB on 'localhost:27017', while 'swarm.cpf' assumes a MongoDB running on node 'cavemongo:27017' in a stack.
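For the environment-variable variant, the service's startup code could pick the storage implementation along the lines of the following Java sketch. It mirrors the 'REALSTORAGEHOST' convention from the example; the StorageTier classes are hypothetical placeholders, and the Redis connector is only a stub, so no particular Redis client library is assumed. Passing the environment in as a Map, rather than calling System.getenv() deep inside the code, keeps the choice unit-testable.

```java
import java.util.Map;

// Hypothetical storage abstraction of the cave service.
interface StorageTier {
  String describe();
}

// In-memory fake object storage: the default.
class FakeStorageTier implements StorageTier {
  @Override
  public String describe() { return "fake in-memory storage"; }
}

// Stub for a Redis-backed connector; a real one would open a client connection
// to host:6379, Redis' default port.
class RedisStorageTier implements StorageTier {
  private final String host;

  RedisStorageTier(String host) { this.host = host; }

  @Override
  public String describe() { return "redis at " + host + ":6379"; }
}

final class StorageConfig {
  static final String ENV_KEY = "REALSTORAGEHOST";

  private StorageConfig() {}

  /** One artifact, external configuration: fake storage unless REALSTORAGEHOST is set. */
  static StorageTier fromEnvironment(Map<String, String> env) {
    String host = env.get(ENV_KEY);
    if (host == null || host.isBlank()) {
      return new FakeStorageTier();
    }
    return new RedisStorageTier(host.trim());
  }
}
```

In the service's main method this would be called as `StorageConfig.fromEnvironment(System.getenv())`, and the same image then runs with fake or real storage depending only on the docker run arguments.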

You may develop your assigned REST service using any technology stack you wish, but it should be containerized. The CDTs must be expressed in Java using TestContainers.

Beware of partial updates of REST resources. There is controversy about whether to use the PUT, POST, or PATCH verb. I advise using POST for partial updates, as recommended by Jim Webber et al. in the "REST in Practice" book (and on Wikipedia): it is the easiest way, and MSDO is not a course on all the gory details of REST.
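The semantics of such a partial update are simple to state: fields present in the request body change, all other fields stay as they are. A minimal Java sketch, assuming the JSON body has already been parsed into a map; the PartialUpdate class and the choice to reject unknown fields (mapped to 400 Bad Request) are hypothetical design decisions, not part of any agreed API.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch of partial-update semantics for a POST on an existing resource:
// fields present in the (already parsed) JSON body overwrite the stored ones,
// absent fields are left untouched.
final class PartialUpdate {
  private PartialUpdate() {}

  /** Returns the updated copy; an unknown field is rejected (map it to 400 Bad Request). */
  static Map<String, Object> apply(Map<String, Object> stored, Map<String, ?> patch) {
    for (String field : patch.keySet()) {
      if (!stored.containsKey(field)) {
        throw new IllegalArgumentException("Unknown field: " + field);
      }
    }
    Map<String, Object> updated = new HashMap<>(stored); // leave the stored version intact
    updated.putAll(patch);
    return updated;
  }
}
```

With this, a body of `{"position": "(0,1,0)"}` posted to a player resource moves the player without the client having to resend the player's name or other fields.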

You are required to model each of the three services by a Java interface, to be used by SkyCave's PlayerServant. That is, do not issue HTTP requests directly from within the PlayerServant code, but interact with, say, the MessageService via a MessageService interface plus an implementation of it.

This allows developing connector tests, as you did in the 'integration-quote-service' exercise solved earlier.

You may develop this interface right away for your own service, and postpone the two consumed services until you have been given access to an image to test on. However, if you have the time and energy, you may also develop fake-object implementations of the consumed services based on their initial API specifications; this way you can start the strangling process very quickly, even before the producing teams have an external service ready.
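A sketch of the interface-plus-fake idea for a consumed service. The MessageService methods shown (postMessage and getMessages with a maximum count, newest first) and the PlayerServantSketch class are hypothetical, since the real signatures follow from the API you negotiate with the producing team. The point is that the servant only knows the interface, so a fake or the real REST connector can be plugged in without touching the servant.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical connector interface for the MessageService, as seen by SkyCave.
interface MessageService {
  void postMessage(String position, String author, String text);
  List<String> getMessages(String position, int maxCount);
}

// Fake object built from the initial API specification: lets strangling start
// before the producing team's image is available.
class FakeMessageService implements MessageService {
  private final Map<String, List<String>> walls = new HashMap<>();

  @Override
  public void postMessage(String position, String author, String text) {
    walls.computeIfAbsent(position, p -> new ArrayList<>()).add("[" + author + "] " + text);
  }

  @Override
  public List<String> getMessages(String position, int maxCount) {
    List<String> wall = walls.getOrDefault(position, List.of());
    // Newest first, at most maxCount entries (an assumed ordering).
    List<String> result = new ArrayList<>();
    for (int i = wall.size() - 1; i >= 0 && result.size() < maxCount; i--) {
      result.add(wall.get(i));
    }
    return result;
  }
}

// The servant depends only on the interface; there is no HTTP code in here.
class PlayerServantSketch {
  private final MessageService messageService;
  private final String playerName;
  private final String position = "(0,0,0)";

  PlayerServantSketch(MessageService messageService, String playerName) {
    this.messageService = messageService;
    this.playerName = playerName;
  }

  void addMessage(String text) { messageService.postMessage(position, playerName, text); }

  List<String> getMessageList() { return messageService.getMessages(position, 8); }
}
```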

Note: You can actually start the strangling before you do connector tests, if you like. Or do it in parallel.

Assume the happy path: all services are up, are operational, do not fail, work, and are happy! We will return to safe failure mode handling in the next mandatory exercise.

Your new SkyCave daemon development should still result in the same 'myrepo/skycave:latest' image that was used in the first course (that is, including source code, and with 'henrikbaerbak' as collaborator), but your compose file may refer to a 'fatJar' variant. Remember to keep the old 'monolith' SkyCave in a branch; you may use it for other experiments in the third mandatory exercise or in the project course.

The CPF system and ObjectManager can dynamically, and without any code changes, handle new service types if you stick to the naming convention of the two properties for the implementation class and the service host+port. An example from my own code base: I have developed a cave service whose 'connector Java interface' is named CaveService, so in my PlayerServant's constructor I can assign the caveService instance:

```java
// Create the internal cave service connector
this.caveService = objectManager.getServiceConnector(CaveService.class,
    Config21.SKYCAVE_CAVESERVICE);
```

defined by the CPF string

```java
public class Config21 {
  public static final String SKYCAVE_CAVESERVICE = "SKYCAVE_CAVESERVICE";
}
```

which means the SkyCave factory will look for the following properties in the provided CPF file:

```
# Solution to Mandatory 2.1 for CaveService using a
# CaveService REST server on localhost:9999
< cpf/http.cpf
SKYCAVE_CAVESERVICE_CONNECTOR_IMPLEMENTATION = cloud.cave.DONOTDISTRIBUTE.solution21.RealCaveServiceConnector
SKYCAVE_CAVESERVICE_SERVER_ADDRESS = localhost:9999
```
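To see why sticking to the naming convention is enough, here is a simplified sketch of what a convention-based factory can do: derive the two property names from the key prefix, load the implementation class by name via reflection, and hand it the server address. This is not the actual SkyCave ObjectManager code; the Connector interface, its initialize method, ConnectorFactory, and DemoCaveServiceConnector are all hypothetical.

```java
import java.util.Map;

// Hypothetical minimal contract that every service connector fulfils.
interface Connector {
  void initialize(String serverAddress);
}

// Hypothetical example connector; a real one would issue REST calls.
class DemoCaveServiceConnector implements Connector {
  String serverAddress;

  @Override
  public void initialize(String serverAddress) { this.serverAddress = serverAddress; }
}

final class ConnectorFactory {
  private ConnectorFactory() {}

  /**
   * Creates the connector for e.g. prefix "SKYCAVE_CAVESERVICE" by reading
   * PREFIX_CONNECTOR_IMPLEMENTATION and PREFIX_SERVER_ADDRESS from the properties.
   */
  static <T extends Connector> T create(Class<T> type, String prefix, Map<String, String> props)
      throws ReflectiveOperationException {
    String className = props.get(prefix + "_CONNECTOR_IMPLEMENTATION");
    String address = props.get(prefix + "_SERVER_ADDRESS");
    if (className == null) {
      throw new IllegalArgumentException("No implementation configured for " + prefix);
    }
    // Load the class by its fully qualified name and call its no-arg constructor.
    Object instance = Class.forName(className.trim()).getDeclaredConstructor().newInstance();
    T connector = type.cast(instance); // fails fast if the class has the wrong type
    connector.initialize(address == null ? null : address.trim());
    return connector;
  }
}
```

Adding a new service type then means adding two properties and an implementation class; the factory itself is never edited.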

Take small steps. Integrate one service at a time, avoid 'BIG BANG REWRITING', and do strive to keep your test cases running all the time, even if it means taking a somewhat longer route (read: writing migration code that you know will be removed later in the process).

Update! After working a bit further than presented in the 'strangling example' document below, I have discovered several suboptimal hints, so please update your copy to the latest version, published 19-11-2021. Sorry for any misguidance! It is a challenging task, so if you end up in a code mess, you may have a look at my own UPDATED strangling example, in which I 'strangle in small steps' while keeping tests running. It also demonstrates the point about CPF and ObjectManager above.

In the end product, CaveStorage should be removed from the architecture, but along the way it and its FakeCaveStorage test double may serve nicely as stepping stones by handling the responsibilities of not-yet-integrated services. Example: PlayerServant is refactored to use the MessageService, while room and player responsibilities are still handled by CaveStorage.
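One way to use CaveStorage as a stepping stone is a small piece of migration code: an adapter that implements the new service interface on top of the old storage, so PlayerServant can already be rewritten against the interface while the data still lives in CaveStorage. Both interfaces below are hypothetical, cut-down stand-ins (not SkyCave's real CaveStorage or CaveService signatures), and the adapter is meant to be deleted once the real REST connector is wired in.

```java
import java.util.HashMap;
import java.util.Map;

// Cut-down, hypothetical stand-in for the legacy storage interface.
interface LegacyCaveStorage {
  String getRoomDescription(String position); // null if there is no room
  boolean addRoom(String position, String description);
}

// The new (hypothetical) service interface that PlayerServant is migrated to.
interface RoomService {
  String describeRoom(String position);
  int createRoom(String position, String description); // returns an HTTP-style status code
}

// Migration code: satisfies the new interface using the old storage.
class LegacyStorageRoomService implements RoomService {
  private final LegacyCaveStorage storage;

  LegacyStorageRoomService(LegacyCaveStorage storage) { this.storage = storage; }

  @Override
  public String describeRoom(String position) { return storage.getRoomDescription(position); }

  @Override
  public int createRoom(String position, String description) {
    // Translate the legacy result into the status codes the REST API will use.
    return storage.addRoom(position, description) ? 201 : 409;
  }
}

// Trivial in-memory legacy storage, playing the role of FakeCaveStorage.
class InMemoryLegacyStorage implements LegacyCaveStorage {
  private final Map<String, String> rooms = new HashMap<>();

  @Override
  public String getRoomDescription(String position) { return rooms.get(position); }

  @Override
  public boolean addRoom(String position, String description) {
    return rooms.putIfAbsent(position, description) == null;
  }
}
```

Because the servant's tests run against RoomService, they keep passing when LegacyStorageRoomService is later swapped for the real connector.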

You are not required to use TLS/HTTPS.

You may let your XService pick out the bearer token in the Authorization header and invoke the "/introspect" path of cavereg.baerbak.com (as outlined in the Authorization slide in the first course) to verify that the given player is actually authorized to access your service.

However, if you do (which is quite a bit more work, and testing becomes, uhum, difficult), allow external services access without authorization (that is, accept requests where the header is missing and grant access anyway) to lower the implementation burden on the consuming groups.
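A minimal Java sketch of this lenient check. The call to the '/introspect' path is deliberately not shown: it is modeled as a `Predicate<String>` that answers whether a token is active, and the BearerAuth class is a hypothetical name. A missing header grants access, as required above; a present but malformed or inactive token is rejected with 401.

```java
import java.util.function.Predicate;

final class BearerAuth {
  // Stand-in for the /introspect call: true if the token is active.
  private final Predicate<String> introspector;

  BearerAuth(Predicate<String> introspector) { this.introspector = introspector; }

  /** Returns the HTTP status to use: 200 to proceed, 401 to reject. */
  int check(String authorizationHeader) {
    if (authorizationHeader == null || authorizationHeader.isBlank()) {
      return 200; // no header: grant access anyway (lenient mode for consuming groups)
    }
    String prefix = "Bearer ";
    // The auth scheme name is case-insensitive, hence regionMatches(true, ...).
    if (!authorizationHeader.regionMatches(true, 0, prefix, 0, prefix.length())) {
      return 401; // header present, but not a bearer token
    }
    String token = authorizationHeader.substring(prefix.length()).trim();
    return !token.isEmpty() && introspector.test(token) ? 200 : 401;
  }
}
```

Keeping introspection behind an injected function also makes the check testable without contacting cavereg.baerbak.com at all.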